Introduction

Data has emerged as a valuable asset in the digital world. Because data collection, storage, and analysis help organizations make informed business decisions, storing massive amounts of data efficiently is imperative for businesses. Various big data file formats support efficient storage, and pairing them with a complementary big data table format can further increase those benefits and help organizations gain an information advantage.

Common Big Data Challenges and Format Considerations

As the volume of data that enterprises manage continues to grow, the ability to query that data quickly and efficiently becomes critically important. Columnar databases and data stores are becoming major players, especially for analytical query systems that depend heavily on data warehouses, data lakes, and, more recently, data lakehouse solutions.

Columnar databases can query vast amounts of data within seconds and deliver strong analytical performance. However, their architecture makes data ingestion challenging when the data is frequently updated or deleted, as with medical or financial records. Although columnar databases cannot match the real-time ingestion or write performance of transactional databases, storing values of the same type side by side compresses far more efficiently, which lowers storage costs. It also speeds up reads: a query can skip the columns it does not need, so aggregation queries run faster than they do against row-based formats.

The key difference between columnar and row-based storage is how the data is natively stored on disk. In a row-oriented database, all the values of a row are stored together in the same block, while a columnar database stores the values of each column together. The storage format therefore has a significant impact on both read and write performance.

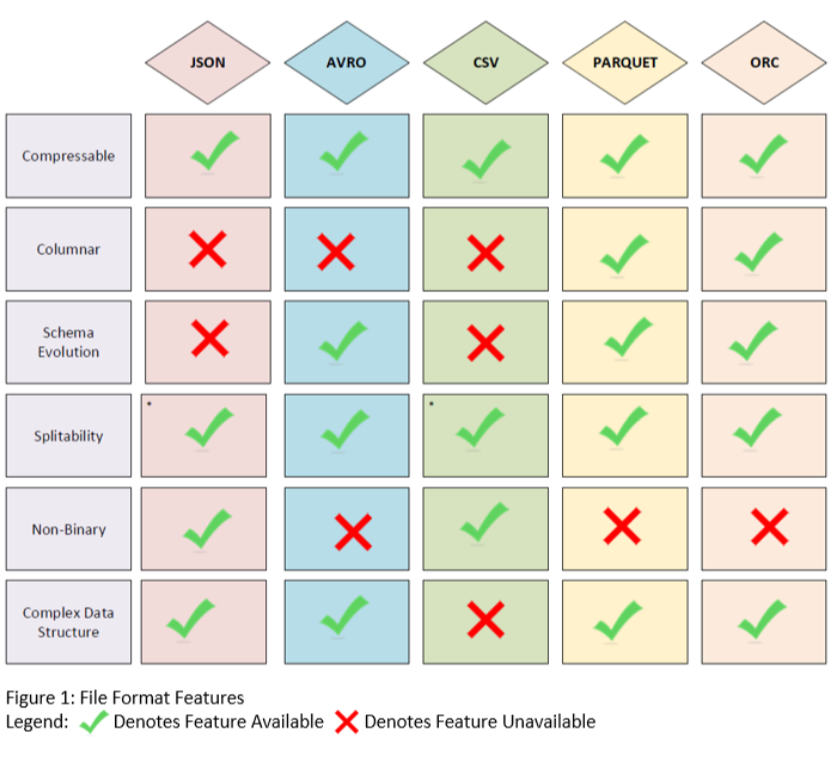
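To make the layout difference concrete, here is a minimal Python sketch in which plain lists stand in for disk blocks. The sample table, the function names, and the choice of run-length encoding (one of several encodings that columnar formats such as Parquet can apply) are illustrative assumptions, not how any particular database physically stores its data.

```python
from itertools import groupby

# Hypothetical sample table: (order_id, country, amount_usd)
COLUMNS = ("order_id", "country", "amount_usd")
rows = [
    (1, "US", 120),
    (2, "US", 75),
    (3, "US", 30),
    (4, "DE", 99),
    (5, "DE", 15),
]


def to_row_layout(rows):
    """Row-oriented: each record's values are stored contiguously."""
    return [value for row in rows for value in row]


def to_column_layout(rows):
    """Column-oriented: all values of one column are stored contiguously."""
    return {name: [row[i] for row in rows] for i, name in enumerate(COLUMNS)}


def run_length_encode(values):
    """Collapse runs of repeated values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


row_store = to_row_layout(rows)
column_store = to_column_layout(rows)

# Aggregating one column: the columnar layout reads only the amount values,
# while the row layout must stride past order_id and country in every record.
total_from_columns = sum(column_store["amount_usd"])
total_from_rows = sum(row_store[2::len(COLUMNS)])
print(total_from_columns, total_from_rows)

# Repeated values of the same type sit side by side, so they encode compactly.
encoded_country = run_length_encode(column_store["country"])
print(encoded_country)  # [('US', 3), ('DE', 2)]
```

Both layouts hold the same data, but only the columnar one lets the sum read a single contiguous run of values, and only there do the five `country` values collapse into two (value, count) pairs.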

Benefits and Comparison of Various Open Data Formats

As organizations consider cloud-native data storage for big data solutions, they have options in terms of the data file formats used for storage. Traditional file formats have included text files and CSV-based formats; however, a wider array of options now exists, including the following:

Parquet: Apache Parquet is a binary columnar file format designed to support very efficient compression and encoding schemes. Using Parquet can reduce storage costs for data files and improve query performance with serverless technologies such as Amazon Redshift Spectrum, Amazon Athena, Azure Data Lake, and Google BigQuery.

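ORC: Apache ORC (Optimized Row Columnar) is a binary columnar file format that organizes data into groups of rows called stripes. Each stripe contains index data, row data, and a stripe footer. The statistics stored with the data, such as column minimum and maximum values, let query engines skip stripes that cannot match a query, which speeds up queries.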

Avro: Apache Avro is a binary, row-based file format and data serialization system. Its support for schema evolution allows old and new systems to read and exchange data seamlessly.

Text/CSV: Comma-Separated Values (CSV) is a row-based, plain-text (non-binary) file format used to exchange tabular data between systems. Each line of the file represents one row of the table. CSV files are easy to edit, and their simple structure makes the format easy to use.

JSON: JavaScript Object Notation (JSON) is a row-based, plain-text (non-binary) file format that lets users store data in a hierarchical structure. Its lightweight, human-readable syntax makes it a common choice for network communication.
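The difference between a flat, row-based text format and a hierarchical one is easy to see with Python's standard `csv` and `json` modules. The binary formats above (Parquet, ORC, Avro) require third-party libraries, so this sketch sticks to the two text formats; the sample record and its field names are hypothetical.

```python
import csv
import io
import json

# Hypothetical record with a nested (hierarchical) field.
record = {
    "patient_id": 42,
    "name": "Jane Doe",
    "address": {"city": "Reston", "state": "VA"},
}

# JSON stores the hierarchy as-is.
json_text = json.dumps(record)

# CSV is flat (one line per row), so nested fields must become separate columns.
flat = {
    "patient_id": record["patient_id"],
    "name": record["name"],
    "address_city": record["address"]["city"],
    "address_state": record["address"]["state"],
}
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(flat), lineterminator="\n")
writer.writeheader()
writer.writerow(flat)
csv_text = buffer.getvalue()

print(json_text)
print(csv_text)

# Round trip: JSON restores the nesting and the integer type; CSV returns strings.
json_back = json.loads(json_text)
csv_back = next(csv.DictReader(io.StringIO(csv_text)))
print(type(json_back["patient_id"]).__name__, type(csv_back["patient_id"]).__name__)  # int str
```

Reading the CSV back yields `"42"` rather than `42` because CSV carries no type information, which is one reason typed, schema-aware formats such as Parquet and Avro are preferred for large analytical datasets.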

The Value of Open Table Formats for Big Data

Open Table Formats (OTFs) are specifications designed to enhance the way data is stored, accessed, and managed in large-scale data processing and analytics environments that use different open data formats for storage. While columnar and row-based formats pertain to data storage, open table formats are more focused on efficient data management and improved interoperability across different data processing engines and data storage formats.

Write operations (e.g., inserts, updates, deletes) on data in object stores such as Amazon S3 and Azure Blob Storage have long posed a challenge to data engineers: objects cannot be modified in place, so changing even a few rows means rewriting whole files, and a change that spans several files is not applied atomically. OTFs such as Apache Hudi and Apache Iceberg address this problem by adding a metadata layer that manages, organizes, and tracks the data stored in the underlying object store and provides transactional guarantees comparable to those of relational databases. The two compare as follows:

Hudi: Apache Hudi is a data management framework that focuses on stream processing and incremental data processing. It supports real-time and batch processing on top of the Hadoop Distributed File System (HDFS) and the cloud object stores that underpin data lakes. Hudi is designed to simplify data ingestion, provide extensive data integration capabilities, and support both real-time and batch queries on a single storage layer.

Iceberg: Apache Iceberg is an open table format designed to simplify table schema evolution while also supporting fast data access. It supports multiple data processing engines, including Spark, Presto, and Hive. Iceberg tables can store their data in columnar file formats such as Parquet and ORC, which makes Iceberg well suited to analytical query systems. It also supports transactions, data versioning, and partition pruning, making it flexible and easy to use.
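The metadata-layer idea behind both formats can be illustrated with a deliberately tiny Python model. Everything here is hypothetical: `ToyTable`, `DataFile`, `Snapshot`, and the `s3://bucket/...` paths are invented for illustration, and real Hudi and Iceberg metadata (Hudi's timeline, Iceberg's metadata files and manifests) is far richer. The sketch shows four ideas from the descriptions above: each commit publishes a complete new snapshot (versioning), an update swaps files rather than editing them in place, per-file min/max statistics let a query skip irrelevant files (pruning), and comparing snapshots yields only newly added files (incremental processing).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataFile:
    """A data file in the object store plus statistics kept in the metadata."""
    path: str
    min_date: str  # ISO-8601 dates compare correctly as strings
    max_date: str


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    files: tuple  # the complete set of live DataFile objects in this version


@dataclass
class ToyTable:
    """Hypothetical table metadata layer; NOT the Hudi or Iceberg API."""
    snapshots: list = field(default_factory=list)

    def current(self):
        return self.snapshots[-1] if self.snapshots else Snapshot(0, ())

    def commit(self, add=(), remove=()):
        """Publish a new version in one step: readers see all changes or none."""
        base = self.current()
        removed = set(remove)
        files = tuple(f for f in base.files if f.path not in removed) + tuple(add)
        snapshot = Snapshot(base.snapshot_id + 1, files)
        self.snapshots.append(snapshot)  # stands in for an atomic metadata swap
        return snapshot.snapshot_id

    def plan_scan(self, start, end, snapshot_id=None):
        """List only files whose date range overlaps [start, end] (pruning)."""
        snap = self.current() if snapshot_id is None else self.snapshots[snapshot_id - 1]
        return [f.path for f in snap.files if f.max_date >= start and f.min_date <= end]

    def incremental(self, since_id):
        """List files added after snapshot `since_id` (an incremental pull)."""
        old = {f.path for f in self.snapshots[since_id - 1].files}
        return [f.path for f in self.current().files if f.path not in old]


table = ToyTable()
v1 = table.commit(add=[DataFile("s3://bucket/t/a.parquet", "2024-01-01", "2024-01-31"),
                       DataFile("s3://bucket/t/b.parquet", "2024-02-01", "2024-02-29")])
# An "update" rewrites b.parquet as c.parquet and swaps them in a single commit.
v2 = table.commit(add=[DataFile("s3://bucket/t/c.parquet", "2024-02-01", "2024-02-29")],
                  remove=["s3://bucket/t/b.parquet"])

print(table.plan_scan("2024-02-10", "2024-02-15"))  # ['s3://bucket/t/c.parquet']
print(table.plan_scan("2024-02-10", "2024-02-15", snapshot_id=v1))  # time travel: ['s3://bucket/t/b.parquet']
print(table.incremental(since_id=v1))  # ['s3://bucket/t/c.parquet']
```

Appending to `self.snapshots` stands in for the single atomic metadata update that real table formats perform through a catalog or commit protocol. Because a reader always resolves exactly one snapshot, it never sees a half-applied update, and older snapshots remain available for time travel.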

Benefits of Combining an Open Data Format and Open Table Format

The overall objective of big data processing systems is to support both fast ingestion (writes) and querying (reads) while effectively managing storage costs. Using open data formats with open table formats in large object stores offers the following benefits:

Interoperability: Data can be easily shared and used across different software applications and platforms without proprietary software or formats that may create compatibility issues.

Accessibility: Typically well-documented and supported, open data file/table formats make it easier for users to access and manipulate data without relying on specific software or vendors.

Data Preservation: Because open formats are independent of specific software or vendors, there is less risk of data becoming inaccessible due to discontinued software or proprietary file formats.

Collaboration: Open and widely supported data file and table formats make it easier for different stakeholders to work together on data analysis, research, or other shared initiatives.

Flexibility and Innovation: Developers and researchers can use open formats to create new tools, visualizations, or applications that enhance data analysis and decision-making processes.

An enterprise solution that combines these complementary technologies in a hybrid approach makes optimized big data operations a pivotal capability of the organization's data strategy. The Multiplatform Data Acquisition, Collection, and Analytics (MDACA) data fabric suite supports this hybrid approach to maximize performance, reduce data storage and processing costs, and simplify data management while upholding the data governance principles of data quality and data security.

Conclusion

Our MDACA data lake technology supports the open data file formats and open table formats discussed above, and more. Because most of our customers focus on reducing costs through efficient data storage, with an emphasis on analytics, combining an open data file format such as Parquet with an open table format such as Hudi or Iceberg provides an optimal solution. Additionally, our Big Data Virtualization technologies fully integrate and support a wide range of data file and data table formats to further connect and optimize the digital infrastructure, tailored to an organization's specific needs.