Jim Martin

Business Dev Director

SpinSys

Crystal Johnson

Project Analyst

SpinSys

Introduction

In today’s world, data is an increasingly valuable asset. Organizations collect, store, and analyze data to make informed business decisions, and one of those decisions is how to store that data most effectively. A variety of big data file formats exist to store large volumes of data efficiently.

Columnar vs. Row-Based Storage

As the volume of data an enterprise organization manages grows, so does the need to query that data quickly, and the efficiency of those queries becomes a considerable factor in its own right. Columnar databases and data stores are becoming major players, especially in analytical query systems such as data warehouses.

The difference between columnar and row-based storage comes down to how data is laid out on disk. A row-oriented database tries to store each whole row of a table in the same block, whereas a columnar database stores the values of each column together in the same block. This difference in layout translates into different performance characteristics and use cases, depending on individual business needs and objectives.
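To make the difference concrete, here is a minimal Python sketch that lays out the same small table both ways. The table, column names, and helper functions are hypothetical, and real databases arrange bytes in disk blocks rather than Python lists; the point is only which values end up next to each other.

```python
from typing import Any

# Hypothetical sample table: (order_id, region, amount)
ROWS: list[tuple[Any, ...]] = [
    (1, "east", 120.0),
    (2, "west", 75.5),
    (3, "east", 42.0),
]
COLUMN_NAMES = ("order_id", "region", "amount")


def row_layout(rows: list[tuple[Any, ...]]) -> list[Any]:
    """Row-oriented: all fields of one record are stored next to each other."""
    return [value for row in rows for value in row]


def column_layout(rows: list[tuple[Any, ...]], names: tuple[str, ...]) -> dict[str, list[Any]]:
    """Column-oriented: all values of one column are stored next to each other."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


print(row_layout(ROWS))
# [1, 'east', 120.0, 2, 'west', 75.5, 3, 'east', 42.0]
print(column_layout(ROWS, COLUMN_NAMES))
# {'order_id': [1, 2, 3], 'region': ['east', 'west', 'east'], 'amount': [120.0, 75.5, 42.0]}
```

Appending a new record touches one contiguous spot in the row layout but every list in the column layout, which mirrors the ingestion trade-off discussed next.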

Columnar databases can query massive amounts of data in a matter of seconds and offer strong analytical performance. However, their underlying architecture introduces a considerable caveat: data ingestion. They perform poorly in systems whose ingestion involves large volumes of updates and deletes, e.g., medical or financial records, and they cannot match the real-time ingestion performance of a transactional database, which is optimized for inserting data quickly.

On the other hand, columnar storage is more cost-effective for large volumes of data, because storing values of the same type next to each other allows for more efficient compression. Queries against a columnar database can also skip data they do not need by reading only the relevant columns. Consequently, aggregation queries take less time than with row-based formats.
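Both benefits can be illustrated with a small Python sketch, assuming a hypothetical table that is already stored column by column and sorted by region. Adjacent equal values in a column collapse under run-length encoding (one of several encodings columnar formats can use), while the same values interleaved in a row layout do not; and an aggregation reads only the one column it needs. The data and function names are illustrative only, and real engines combine such encodings with general-purpose compression.

```python
from itertools import groupby
from typing import Any, Sequence

# Hypothetical table stored column by column, sorted by region.
TABLE: dict[str, list[Any]] = {
    "region": ["east", "east", "east", "east", "west", "west"],
    "amount": [120.0, 42.0, 18.5, 60.0, 75.5, 10.0],
    "note": ["a", "b", "c", "d", "e", "f"],
}


def run_length_encode(values: Sequence[Any]) -> list[tuple[Any, int]]:
    """Collapse runs of equal adjacent values into (value, run_length) pairs."""
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


def total_amount(table: dict[str, list[Any]]) -> float:
    """Aggregate by scanning only the 'amount' column; other columns are not read."""
    return sum(table["amount"])


# Columnar: six region values collapse to two (value, count) pairs.
print(run_length_encode(TABLE["region"]))  # [('east', 4), ('west', 2)]

# Row layout of the same data: fields from different columns are interleaved,
# so no two adjacent values are equal and run-length encoding saves nothing.
row_values = [v for row in zip(*TABLE.values()) for v in row]
print(len(run_length_encode(row_values)) == len(row_values))  # True

print(total_amount(TABLE))  # 326.0
```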

Combining fast ingestion and fast querying while managing cost is the overall objective of data processing systems. An enterprise architecture that takes a hybrid approach, supporting both storage layouts, maximizes big data operations and is key to the overall strategy. The Multiplatform Data Acquisition, Collection and Analytics (MDACA) Platform is designed to support this hybrid approach to optimize performance and cost.

File Formats

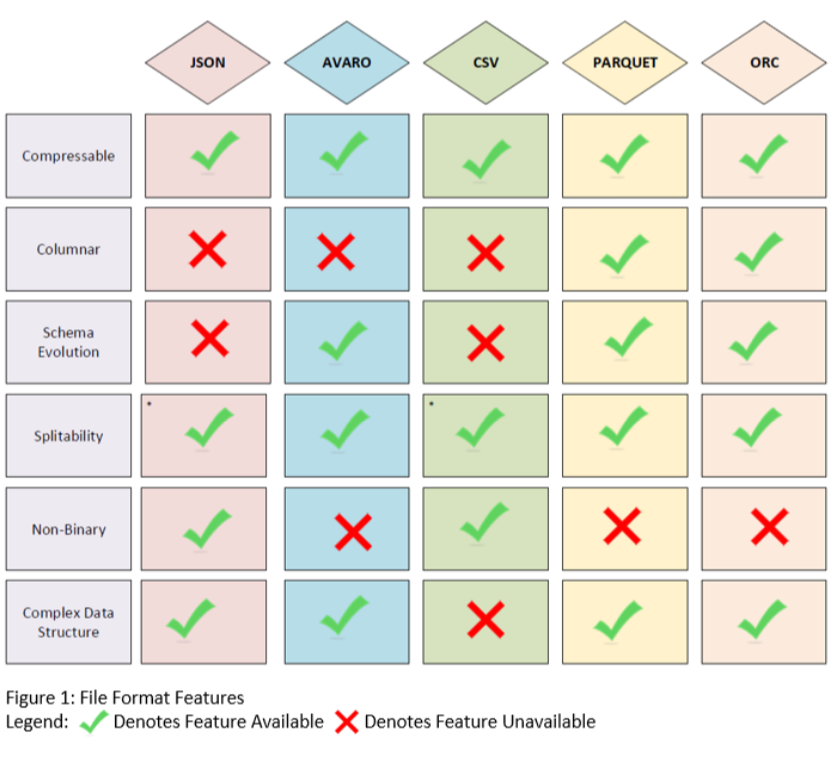

As organizations that manage big data solutions start to look at cloud-native data storage, they have options for the file formats used to store their data. Traditional formats have included text files and CSV-based formats; organizations now have a wider array of options, including:

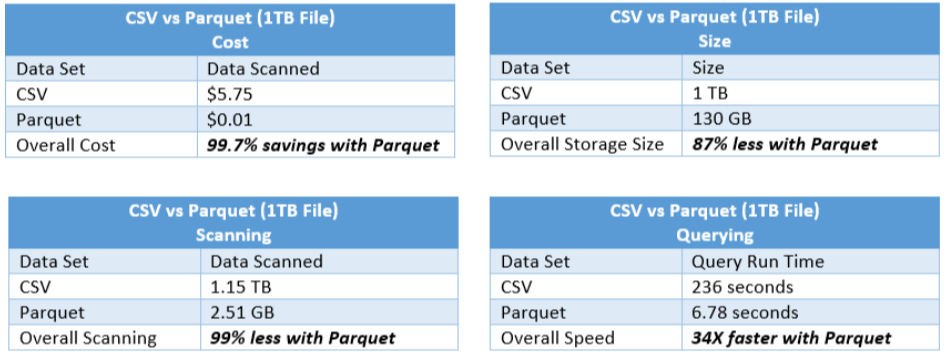

Parquet: Apache Parquet is a binary, columnar file format designed to support very efficient compression and encoding schemes. Using Parquet may reduce storage costs for data files and maximize the effectiveness of querying data with serverless technologies such as Redshift Spectrum, Amazon Athena, Azure Data Lake, and BigQuery.
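Dictionary encoding is one of the encoding schemes Parquet can apply to columns with repeated values. The plain-Python sketch below, with hypothetical function names, shows only the core idea: store each distinct value once and replace the column's values with small integer indices. It does not read or write Parquet files; in practice a library such as pyarrow handles that, and the real format further bit-packs and run-length encodes the indices.

```python
from collections.abc import Hashable, Sequence
from typing import TypeVar

T = TypeVar("T", bound=Hashable)


def dictionary_encode(values: Sequence[T]) -> tuple[list[T], list[int]]:
    """Replace each value with its index into a list of distinct values."""
    dictionary: list[T] = []
    positions: dict[T, int] = {}
    indices: list[int] = []
    for value in values:
        if value not in positions:
            # First time we see this value: append it to the dictionary.
            positions[value] = len(dictionary)
            dictionary.append(value)
        indices.append(positions[value])
    return dictionary, indices


def dictionary_decode(dictionary: Sequence[T], indices: Sequence[int]) -> list[T]:
    """Rebuild the original column from the dictionary and index list."""
    return [dictionary[i] for i in indices]


countries = ["US", "US", "CA", "US", "MX", "CA"]  # hypothetical column
dictionary, indices = dictionary_encode(countries)
print(dictionary)  # ['US', 'CA', 'MX']
print(indices)     # [0, 0, 1, 0, 2, 1]
assert dictionary_decode(dictionary, indices) == countries
```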

Avro: Apache Avro is a data serialization system that uses a binary, row-based file format. Its support for schema evolution allows old and new systems to read and exchange data seamlessly.
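Avro data files store the writer's schema in the file header, and a reader resolves it against its own schema. The sketch below imitates two of Avro's resolution rules in plain Python: a field the reader adds is filled from its default, and a field the reader no longer knows is ignored. The schemas and the resolve_record function are hypothetical; a real application would rely on an Avro library, which also handles type promotion and other rules omitted here.

```python
from typing import Any

# Hypothetical old (writer) and new (reader) schemas in Avro's JSON style.
WRITER_SCHEMA = {
    "type": "record",
    "name": "Customer",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "name", "type": "string"},
        {"name": "legacy_code", "type": "string"},
    ],
}
READER_SCHEMA = {
    "type": "record",
    "name": "Customer",
    "fields": [
        {"name": "id", "type": "long"},
        {"name": "name", "type": "string"},
        {"name": "email", "type": ["null", "string"], "default": None},
    ],
}


def resolve_record(record: dict[str, Any], writer: dict, reader: dict) -> dict[str, Any]:
    """Project a record written with `writer` onto `reader`, Avro-style.

    - fields present in both schemas are copied;
    - reader fields missing from the writer take the reader's default;
    - writer fields unknown to the reader are dropped.
    """
    written = {field["name"] for field in writer["fields"]}
    result: dict[str, Any] = {}
    for field in reader["fields"]:
        name = field["name"]
        if name in written:
            result[name] = record[name]
        elif "default" in field:
            result[name] = field["default"]
        else:
            raise ValueError(f"no value or default for field {name!r}")
    return result


old_record = {"id": 7, "name": "Ada", "legacy_code": "X1"}
print(resolve_record(old_record, WRITER_SCHEMA, READER_SCHEMA))
# {'id': 7, 'name': 'Ada', 'email': None}
```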

ORC: Optimized Row Columnar (ORC) is a binary, columnar file format that organizes row data into groups called stripes. Each stripe holds index data, row data, and a stripe footer. Statistics kept with the data, such as each column's minimum and maximum values, let readers skip data that cannot match a query and so speed up queries.
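The way such statistics speed up queries can be sketched in Python. Under the simplifying assumption that each stripe records only the minimum and maximum of a single integer column, a reader can skip any stripe whose range cannot satisfy the predicate. The Stripe class and helper functions are hypothetical; real ORC readers keep richer statistics at the file, stripe, and row-group level.

```python
from dataclasses import dataclass


@dataclass
class Stripe:
    """Hypothetical stand-in for an ORC stripe: column values plus min/max stats."""
    values: list[int]
    minimum: int
    maximum: int


def build_stripes(values: list[int], stripe_size: int) -> list[Stripe]:
    """Split a column into fixed-size stripes and record each stripe's min/max."""
    stripes = []
    for start in range(0, len(values), stripe_size):
        chunk = values[start:start + stripe_size]
        stripes.append(Stripe(chunk, min(chunk), max(chunk)))
    return stripes


def count_greater_than(stripes: list[Stripe], threshold: int) -> tuple[int, int]:
    """Count values > threshold, skipping stripes whose max rules them out.

    Returns (matching_count, stripes_scanned).
    """
    count = 0
    scanned = 0
    for stripe in stripes:
        if stripe.maximum <= threshold:
            continue  # statistics prove no value in this stripe can match
        scanned += 1
        count += sum(1 for v in stripe.values if v > threshold)
    return count, scanned


readings = list(range(100))  # hypothetical sorted column: 0..99
stripes = build_stripes(readings, stripe_size=25)
print(count_greater_than(stripes, threshold=80))  # (19, 1)
```

Only one of the four stripes is actually read; the other three are ruled out by their statistics alone.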

Text/CSV: Comma-Separated Values (CSV) is a row-based, plain-text file format used to exchange tabular data between systems. Each line of the file represents a row in the table. CSV data is easy to edit, and the simple structure makes the format easy to use.
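Python's standard csv module is enough to show the format's one-row-per-line structure and one of its trade-offs. In this sketch (the table is hypothetical), the writer automatically quotes a value that contains a comma, and the reader returns every field as a string, because CSV itself carries no schema or type information.

```python
import csv
import io

# Hypothetical table: a header row followed by data rows.
rows = [
    ["order_id", "region", "amount"],
    [1, "east", 120.0],
    [2, "west, coastal", 75.5],  # embedded comma is quoted on write
]

buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
text = buffer.getvalue()
print(text)

# Reading back: numbers come back as strings, since CSV has no types.
parsed = list(csv.reader(io.StringIO(text)))
print(parsed[2])  # ['2', 'west, coastal', '75.5']
```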

JSON: JavaScript Object Notation (JSON) is a plain-text, row-based file format that lets users store data in a hierarchical structure. Its lightweight syntax keeps messages compact, so it is widely used in network communication.
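A short sketch with Python's standard json module shows the hierarchical structure described above: a single record can nest an object and an array, and passing `separators=(",", ":")` drops optional whitespace to keep the serialized text compact. The order record is hypothetical.

```python
import json

# Hypothetical hierarchical record: a nested object and an array in one document.
order = {
    "order_id": 1,
    "customer": {"name": "Ada", "region": "east"},
    "items": [
        {"sku": "A-100", "qty": 2},
        {"sku": "B-200", "qty": 1},
    ],
}

compact = json.dumps(order, separators=(",", ":"))  # no optional whitespace
print(compact)

restored = json.loads(compact)
print(restored["customer"]["region"])                  # east
print(sum(item["qty"] for item in restored["items"]))  # 3
```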

Benefits of Using Parquet

Apache Parquet's columnar design supports very efficient compression and encoding, and its fast analytics and easy-to-manage interface make it an attractive option for storing data. Parquet also integrates well with existing tools: analytics engines and traditional databases such as Oracle and SQL Server can query external data, so you can keep using traditional tools while optimizing the cost and management of data storage. Storing data in Parquet gives users access to their data and the necessary tools in one centralized location, which reduces the need to copy data multiple times. Together, these benefits can lower storage costs and make querying with serverless technologies such as Redshift Spectrum, Amazon Athena, Azure Data Lake, and BigQuery more effective.

Conclusion

As part of the MDACA Big Data Platform, our Data Lake solutions support the file formats discussed above and more. Because most of our customers focus on reducing data storage costs with an emphasis on analytics, we typically recommend Parquet, but we can adjust based on customer needs and requirements. Additionally, our Big Data Virtualization technologies fully integrate with and support a wide range of data file storage formats, and can be tailored to individual business needs.